Welcome to TutBig. Today I will show you a custom robots.txt generator for Blogger. Maybe you have heard the term robots.txt. What is robots.txt? Is it necessary in the settings? What if I leave it alone? You probably have many questions like these, especially if you are a new blogger.

If you want your blog listed and your pages crawled quickly, you should add this custom robots.txt file to your Blogger blog. It is also part of search engine optimization, so you should be familiar with the terms.

How Does It Work?

Robots.txt is a set of instructions that tells search engine robots which pages of our blog they may explore or browse. In effect, robots.txt filters what search engines see of our blog.

Let's say a robot wants to visit a webpage URL, for example http://www.example.com/about.html. Before it does so, it will check http://www.example.com/robots.txt, and only then will it access the particular webpage.

Every blog already has a robots.txt file provided by Blogger/Blogspot. By default, the robots.txt on a blog looks like this:
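The lookup described above can be sketched in a few lines of Python. This is only an illustration of where a robot looks for the file; the function name `robots_txt_url` is made up for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address a robot checks before fetching page_url."""
    parts = urlsplit(page_url)
    # robots.txt sits at the root of the same scheme and host as the page
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("http://www.example.com/about.html"))
# prints http://www.example.com/robots.txt
```

Whatever page the robot is after, the file it checks first is always at the root of that site.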

    User-agent: Mediapartners-Google
    Disallow:

    User-agent: *
    Disallow: /search
    Allow: /

    Sitemap: http://yourblogname.com/atom.xml?redirect=false&start-index=1&max-results=500

What Is The Meaning Of The Above Code?

User-agent: Mediapartners-Google

This line addresses the rules that follow it to the Google AdSense robot. If you are not using Google AdSense on your blog, you can simply remove this line together with the Disallow: line directly below it.

Disallow:

The Disallow command lists what a robot is not allowed to crawl. Here it is left empty, which means nothing is blocked, so the AdSense robot may crawl every page on your blog.

User-agent: *

The rules that follow apply to all search engine robots.

Disallow: /search

Robots are not allowed to crawl anything in the search folder, such as /search/label/... and /search?updated... Label pages are kept out of search because a label URL is a listing of posts and does not lead to one specific page.

Example :

http://www.tutbig.blogspot.com/search/label/SEO

http://www.tutbig.blogspot.com/search/SEO

Allow: /

This command allows all pages to be crawled, except those listed under Disallow above. The slash mark (/) stands for the root of the blog, so it covers every page.

Sitemap:

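This line tells robots where to find the sitemap of your blog. Here it points to the blog's Atom feed, limited to the first 500 posts:

Sitemap: http://yourblogname.com/atom.xml?redirect=false&start-index=1&max-results=500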
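If you want to see the default rules in action, here is a small sketch using Python's standard `urllib.robotparser` module. The post path `/2020/01/my-post.html` and the helper name `load_rules` are made up for the example, and Python's parser may not interpret every rule exactly the way a particular search engine does.

```python
from urllib.robotparser import RobotFileParser

# The default Blogger rules described above
DEFAULT_RULES = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://yourblogname.com/atom.xml?redirect=false&start-index=1&max-results=500
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt text without downloading anything."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load_rules(DEFAULT_RULES)
BLOG = "http://yourblogname.com"

# Label pages live under /search, so ordinary robots are blocked
print(rules.can_fetch("Googlebot", BLOG + "/search/label/SEO"))             # False
# A normal post (hypothetical path) is covered by "Allow: /"
print(rules.can_fetch("Googlebot", BLOG + "/2020/01/my-post.html"))         # True
# The AdSense robot has an empty Disallow, so nothing is blocked for it
print(rules.can_fetch("Mediapartners-Google", BLOG + "/search/label/SEO"))  # True
```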

How To Block Robots From Certain Pages?

To keep Google from crawling a particular page, just disallow that page using the Disallow command. For example, if I don't want my About Me page to show up in search engines, I simply paste the code Disallow: /p/about-me.html right after Disallow: /search.

The code will look like this:

    User-agent: Mediapartners-Google
    Disallow:

    User-agent: *
    Disallow: /search
    Disallow: /p/about-me.html
    Allow: /

    Sitemap: http://yourblogname.com/atom.xml?redirect=false&start-index=1&max-results=500

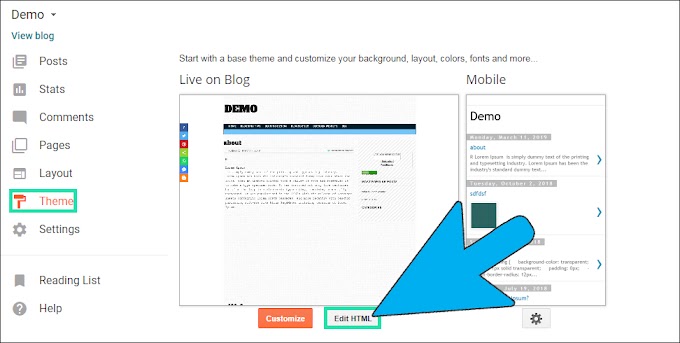
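If you have several pages to block, typing the file by hand gets tedious. Below is a rough sketch of a tiny generator in Python that produces a file in the same layout as above. The function name `build_robots_txt` and its parameters are my own invention for this example, not something Blogger provides.

```python
from typing import Iterable


def build_robots_txt(blog_url: str, blocked_paths: Iterable[str]) -> str:
    """Build custom robots.txt text with extra Disallow lines after /search."""
    lines = [
        "User-agent: Mediapartners-Google",
        "Disallow:",
        "",
        "User-agent: *",
        "Disallow: /search",
    ]
    for path in blocked_paths:
        # Paths in robots.txt start at the blog root, so make sure of the slash
        if not path.startswith("/"):
            path = "/" + path
        lines.append(f"Disallow: {path}")
    lines.append("Allow: /")
    lines.append("")
    sitemap = blog_url.rstrip("/") + "/atom.xml?redirect=false&start-index=1&max-results=500"
    lines.append(f"Sitemap: {sitemap}")
    return "\n".join(lines) + "\n"


print(build_robots_txt("http://yourblogname.com", ["/p/about-me.html"]))
```

The printed text is what you would paste into the custom robots.txt box in your Blogger settings. Replace yourblogname.com with your own blog address first.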

How To Add Custom Robots.txt File In Blogger?

Note: Before adding a custom robots.txt file in Blogger, keep one thing in mind: if you use the robots.txt file incorrectly, your entire blog may be ignored by search engines.
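One way to guard against the mistake described in the note is to test your rules before you paste them. The sketch below uses Python's standard `urllib.robotparser` module to check whether the home page would be blocked. The function name `blocks_home_page` is made up for this example, and passing the check does not guarantee every search engine will read the file the same way.

```python
from urllib.robotparser import RobotFileParser


def blocks_home_page(robots_txt: str, user_agent: str = "Googlebot") -> bool:
    """Return True if these rules stop user_agent from crawling the home page."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


good_rules = "User-agent: *\nDisallow: /search\nAllow: /\n"
bad_rules = "User-agent: *\nDisallow: /\n"  # a single slash blocks the whole blog

print(blocks_home_page(good_rules))  # False
print(blocks_home_page(bad_rules))   # True
```

If the check prints True for your own rules, fix them before saving.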

Step 1

Go to your Blogger Dashboard.

Step 2

Now go to Settings >> Search Preferences >> Crawlers and indexing >> Custom robots.txt >> Edit >> Yes

Step 3

Now paste your custom robots.txt code in the box.

Step 4

Now click on the Save Changes button.

Step 5

That's all, you are done! Now you can see your robots.txt file. To view it, type this address in your browser:

http://yourblogname.com/robots.txt

And if you leave the setting alone, that's okay. Your blog will still be crawled by search engine robots because, as I mentioned before, every blog already has a default robots.txt.

I hope you enjoy this post and the photos.